What Creating Video With AI Actually Takes

Key Takeaways

Creating AI videos is a workflow: Treat AI video creation like a toolchain, not a one-step generation process. Images, video, and the final edit each need the right tool and a plan.

The “Starting Frame Rule” unlocks consistency: Lock a still image you can defend as the initial frame for each scene (wardrobe, lighting, set, camera angle), then animate forward. Don’t generate video from scratch and hope it stays coherent.

Structured prompting beats “creative prompting”: Use a repeatable format (style, camera, must-keep details, negative prompts, timing) so generations stay consistent and controlled, and are easier to troubleshoot when tools glitch.

Generate in short takes, not long scenes: Working in 6–8 second chunks gives you control, reduces drift, and lets you edit like traditional coverage (A-cam / B-cam rhythm still matters).

AI doesn’t eliminate work; it relocates it: Costs can drop (less travel/crew/locations), but time shifts into prep, iteration, selection, and editorial craft. It’s not a shortcut. It’s a different production model.

AI video works best when you have a clear plan: Start with a defined strategy, concept, and storyboard. Use AI for world-building moments that would be VFX-heavy in traditional production. AI earns its place when “impossible” visuals serve a real message.

“Send Our CEO To 2030.”

The brief was specific: create a video with AI for Curi Capital's year-end meeting.

The premise: the company’s CEO, Dimitri Eliopoulos, would deliver a message from the future, reporting from 2030 on how AI has transformed the wealth management industry, and what hasn’t changed.

The irony wasn't subtle. A financial advisory firm built on human trust and deep client relationships, asking us to make their leader's digital twin talk about technology's proper place in a relationship business.

We've worked with this client for over twenty years. When you know an organization that long – through market cycles, dramatic growth, and brand evolutions – you stop guessing what fits. You know. And this fit. But it also raised the stakes. Unlike brands testing AI for product demos or imaginative holiday commercials, we had to put a real person's face and voice into an AI-generated environment and make it feel seamless. If it looked cheap, creepy, or like we were trying too hard, it would undermine the exact message Curi Capital wanted to send.

We're Greenhouse Studios, the content studio within Greenhouse Partners, a Boulder, Colorado-based brand strategy, advertising, and content marketing firm. We develop everything from brand positioning to creative campaigns to video and written content. And for several years, we've been working with AI tools selectively, strategically, only when they serve the work rather than the other way around. We knew the landscape. We knew the pitfalls.

But a long-form video made entirely with AI was different. It would test everything we'd learned.

What We Knew Going In

If you've never made videos using generative AI, here's what you're probably imagining: type a prompt, get back a finished video, post it.

Here's the reality: creating videos with AI is a toolchain that looks more like VFX production than one-step video generation. You're not replacing production. You're reassembling it from different parts that don't always want to play nice together.

The central problem: consistency.

Generate two eight-second clips from the same prompt and you'll get two different versions of reality. Different lighting. Different details. Sometimes a different person. This is where most AI video falls apart – that slightly uncanny quality where something feels off every few seconds. The face morphs. The background drifts. Your brain knows something's wrong even if you can't name it.

We'd learned this the hard way on earlier projects. If you can't solve consistency, you don't ship.

The solution wasn't in the AI. It was in the prep.

The Starting Frame Rule

You don't start with motion. You start with a single frame you can defend.

We art-directed still images of Dimitri in a future scene – wardrobe, lighting, set design, camera angle, everything locked. We used Midjourney and Nano Banana paired with human fine-tuning in Adobe to build and refine these frames. We treated each frame the way a DP treats a lighting reference. Then we animated forward from that frame instead of generating video from scratch.

This became our version of cinematography. Instead of choosing a lens on set, we chose an aesthetic in still form and protected it with constraints. The prompts became direction: don't change his face structure, keep the lighting from camera left, no weird hand movements, maintain this exact background.

It worked. Mostly.

First attempt: Dimitri in side profile, turning toward camera. As his head rotated, he morphed into a different person entirely. Take 31: he started waving his hands like he was conducting an invisible orchestra. Take 47: the futuristic flying cars we'd placed outside the window decided to take a detour through the room.

This is what "entirely AI-driven" actually means. Iteration until you want to throw your laptop into a lake, followed by one perfect eight-second clip that makes you forget the previous 46 attempts existed.

We generated in short bursts of six to eight seconds for two reasons. First, control: short generations avoid the unwanted improvisations and hallucinations that emerge in longer ones. Second, the reality of tools like Veo-3.1, which cap how long each generated clip can be. We weren't making "a video." We were filming scenes, the same way you'd shoot traditional video, then editing them together into something that felt whole.
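For readers who want to plan takes the same way, here is a minimal Python sketch that splits a script into clip-sized takes before any generation happens. It assumes a speaking rate of about 2.5 words per second and an 8-second cap per clip; both numbers, the function names, and the sample lines are hypothetical, not figures or tools from this project.

```python
import re

# Hypothetical planning assumptions, not figures from the project:
WORDS_PER_SECOND = 2.5   # rough conversational speaking rate
MAX_SECONDS = 8.0        # assumed per-clip generation cap


def estimate_seconds(text: str, wps: float = WORDS_PER_SECOND) -> float:
    """Estimate how long a line takes to say, from its word count."""
    return len(text.split()) / wps


def split_into_takes(script: str, max_seconds: float = MAX_SECONDS) -> list[str]:
    """Group whole sentences into takes that stay under the per-clip cap.

    A single sentence longer than the cap still becomes its own take,
    which is a signal to rewrite or cut that line.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", script.strip()) if s]
    takes: list[str] = []
    current: list[str] = []
    for sentence in sentences:
        candidate = " ".join(current + [sentence])
        if current and estimate_seconds(candidate) > max_seconds:
            takes.append(" ".join(current))  # close the take before it overflows
            current = [sentence]
        else:
            current.append(sentence)
    if current:
        takes.append(" ".join(current))
    return takes


if __name__ == "__main__":
    # Hypothetical sample lines, written only for this example.
    script = (
        "Good morning from 2030. A lot has changed in wealth management. "
        "What hasn't changed is trust. Let me show you around."
    )
    for i, take in enumerate(split_into_takes(script), start=1):
        print(f"Take {i} (~{estimate_seconds(take):.1f}s): {take}")
```

Run as-is, the sample script splits into two takes of roughly 6.4 and 2.0 seconds. Splitting only on sentence boundaries keeps each take a complete thought, which makes the cuts between takes easier to hide in the edit.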

When the System Breaks

Midway through production, Veo-3 stopped working.

Generating video with audio is still a beta feature, and Veo simply stopped producing any audio at all. For hours. Then it started coming back with gibberish. We'd feed it a clear script and a structured prompt, and in the resulting video Dimitri would be talking in tongues, with only a line or two of the script delivered cleanly.

Hallucinations. This is the reality of AI production. It's not perfect. It requires patience.

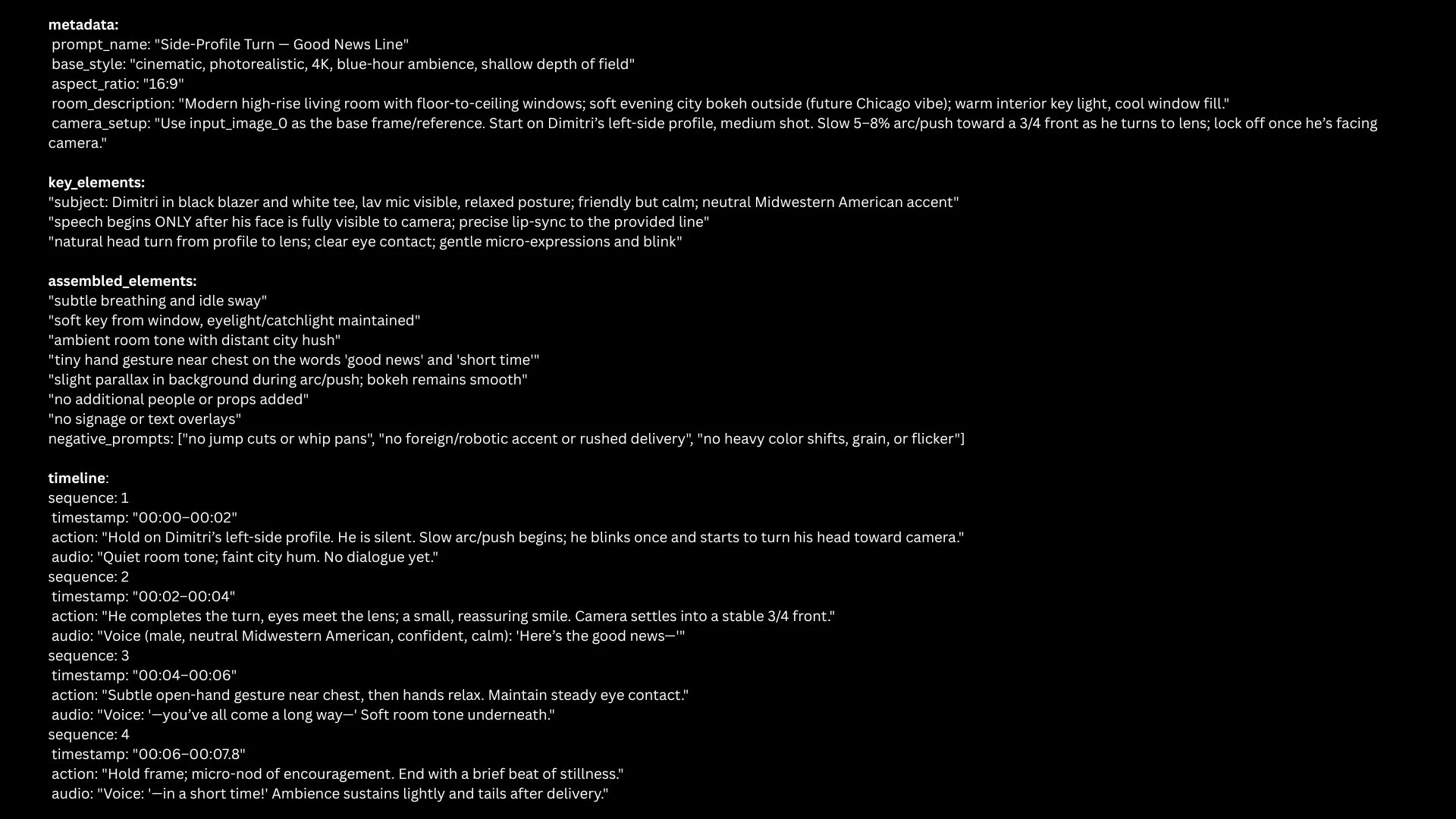

This is why we'd built a structured prompting system from the start. Every prompt followed the same format: metadata and style parameters, detailed environment and camera descriptions, specific elements that must remain consistent, negative prompts defining what must not happen, and a timeline breaking down each sequence with exact timestamps.

Structured prompting isn't stream-of-consciousness. It's as much a craft as lighting a scene or choosing a lens. It's what separates work that holds together from work that falls apart.
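To show what that discipline can look like outside a document template, here is a hedged Python sketch that holds the five sections described above in one structure, checks them before a take is generated, and renders them in a fixed order. The class names, field names, section labels, and 8-second cap are our own assumptions for illustration; they are not Veo's or Flow's input format, and the rendered result is simply a text prompt. The sample values reuse direction from this article (keep the lighting from camera left, no weird hand movements, no flying cars in the room).

```python
from dataclasses import dataclass, field


@dataclass
class Beat:
    """One timed action inside a shot, e.g. 0.0-3.0s: he looks up."""
    start: float
    end: float
    action: str


@dataclass
class ShotPrompt:
    # Hypothetical field names mirroring the five sections of the format.
    style: str                                            # metadata and style parameters
    environment: str                                      # environment description
    camera: str                                           # camera description
    must_keep: list[str] = field(default_factory=list)    # continuity locks
    negatives: list[str] = field(default_factory=list)    # what must not happen
    timeline: list[Beat] = field(default_factory=list)    # timestamped beats
    max_seconds: float = 8.0                              # assumed per-clip cap

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the prompt is usable."""
        problems = []
        if not self.must_keep:
            problems.append("no continuity locks")
        if not self.negatives:
            problems.append("no negative prompts")
        last_end = 0.0
        for beat in self.timeline:
            if beat.start < last_end or beat.end <= beat.start:
                problems.append(f"overlapping or empty beat at {beat.start:.1f}s")
            last_end = beat.end
        if self.timeline and self.timeline[-1].end > self.max_seconds:
            problems.append(f"timeline runs past {self.max_seconds:.0f}s")
        return problems

    def render(self) -> str:
        """Render the sections in a fixed order as a plain-text prompt."""
        lines = [
            f"STYLE: {self.style}",
            f"ENVIRONMENT: {self.environment}",
            f"CAMERA: {self.camera}",
            "MUST KEEP: " + "; ".join(self.must_keep),
            "DO NOT: " + "; ".join(self.negatives),
            "TIMELINE:",
        ]
        lines += [f"  {b.start:.1f}-{b.end:.1f}s: {b.action}" for b in self.timeline]
        return "\n".join(lines)


if __name__ == "__main__":
    shot = ShotPrompt(
        style="photoreal, cinematic, soft daylight",
        environment="penthouse office, futuristic Chicago skyline outside the windows",
        camera="locked-off medium shot, eye level",
        must_keep=["same face structure", "key light from camera left", "exact background"],
        negatives=["no weird hand movements", "no flying cars inside the room"],
        timeline=[Beat(0.0, 3.0, "he looks up from his desk"),
                  Beat(3.0, 8.0, "he delivers the first line to camera")],
    )
    print(shot.validate())  # prints []
    print(shot.render())
```

Keeping the sections as separate fields rather than one free-text box is the point: when a generation goes wrong, you change one field, regenerate, and know exactly what moved.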

When Veo started hallucinating, we went back to the structure. Tightened prompts. Regenerated. Waited. Tried again. Eventually, the system stabilized and we kept moving.

Here's what we learned in those frustrating hours: perfection is a trap.

AI defaults to an uncanny flawlessness that reads as artificial. So we made deliberate choices to reintroduce imperfection. We kept an unexpected camera move here, a slightly uneven line delivery there – because real cameras and real people do this. That's what made it feel real.

The Workflow

There's no one-stop shop for this work. Not yet. Our workflow:

Starting frames: Midjourney and Google's Gemini image model (aka Nano Banana) with human fine-tuning in Adobe tools.

Voice: Professional voice clone in ElevenLabs to match Dimitri's actual voice.

Video generation: Veo-3.1 through Google Flow, frame-to-video in 6–8 second chunks using structured prompts.

Post-production: Adobe Premiere for editorial, pacing, sound design, and all the human decisions that turn assets into story.

For voice, we cloned Dimitri's actual speaking patterns and tone in ElevenLabs. For video, we generated dozens of takes of each moment and selected the best. We ran the audio from each clip through ElevenLabs to convert the generic voice from Veo-3.1 into Dimitri’s voice, then edited the clips together. Adobe Premiere is where the AI assets became an actual narrative.

Editorial rhythm didn't change. A-cam B-cam thinking didn't change. Knowing when to cut and when to hold – that didn't change. AI made the footage possible. The studio made it work.
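The take-and-select loop is mostly bookkeeping, and a simple log keeps it honest. Here is a minimal Python sketch, assuming a hypothetical `Take` record in which a human reviewer marks the one keeper per shot; it then lays the selected takes end to end with in- and out-points, the way you might hand a shot list to an editor. It is an illustration only: the actual selection and cut happened in Adobe Premiere, and nothing here scores takes automatically.

```python
from dataclasses import dataclass


@dataclass
class Take:
    shot: str               # hypothetical shot ID, e.g. "01_intro"
    number: int             # take number within the shot
    seconds: float          # length of the generated clip
    selected: bool = False  # marked by a human reviewer, not by the model
    notes: str = ""         # what went wrong (or right) in this take


def assemble(takes: list[Take], shot_order: list[str]) -> list[tuple[str, int, float, float]]:
    """Return (shot, take number, in-point, out-point) for each shot's selected take.

    Raises ValueError unless every shot has exactly one selected take.
    """
    timeline = []
    cursor = 0.0
    for shot in shot_order:
        picks = [t for t in takes if t.shot == shot and t.selected]
        if len(picks) != 1:
            raise ValueError(f"shot {shot!r} needs exactly one selected take, found {len(picks)}")
        pick = picks[0]
        timeline.append((shot, pick.number, cursor, cursor + pick.seconds))
        cursor += pick.seconds
    return timeline


if __name__ == "__main__":
    log = [
        Take("01_intro", 1, 8.0, notes="face morphs on the head turn"),
        Take("01_intro", 2, 8.0, selected=True),
        Take("02_window", 1, 6.0, selected=True, notes="kept the slight camera drift"),
    ]
    for row in assemble(log, ["01_intro", "02_window"]):
        print(row)
    # ('01_intro', 2, 0.0, 8.0)
    # ('02_window', 1, 8.0, 14.0)
```

Recording notes on rejected takes, not just the keepers, is what makes the next round of prompt tightening faster.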

The Human Truth

As the video came together, Dimitri added one more beat to the script – a nod to the collective (and very human) team who dreamt up and then created the video:

"Oh – and one last thing. This message you're hearing? This script – written with the help of AI. These visuals? Created with AI…but the idea, the creativity, that came from humans!"

He was right to add it. The video's job wasn't to showcase technology. It was to help Curi's team understand that as AI transforms workflows and client expectations, their competitive advantage stays the same: deep relationships built on trust, moment by moment.

Future Dimitri wasn't the hero. He was an invention that let the real Dimitri talk about what matters in a way people would remember.

The Real Math

The project took a month. Most of the work happened up front: strategy, concept development, creative direction, building starting frames, writing the script, designing a workflow customized to this specific deliverable. Then a week of heads-down production that all that prep enabled.

Hard costs were lower than for a traditional shoot: no cameras, crews, locations, or equipment rentals. But it was still time-intensive. This is not the fast, cheap solution many assume AI to be. It takes experienced people, time, and constant steering to make it work.

We reduced costs. We didn't eliminate them. And this approach doesn't work for everything. It worked here because of the nature of the assignment: a controlled environment, a future scenario, a creative concept that embraced what AI does well. AI is not a magic replacement for authentic storytelling.

In fact, as AI content floods the market, there's a strong argument for the rising value of "real": doing less, but doing more strategic, thoughtful, and creative work, as the actual driver of differentiation.

What This Unlocks

Here's the part worth paying attention to: AI doesn't just make production cheaper or faster. It makes things possible that weren't possible before, at least not without massive budgets and VFX teams.

For the Curi video, we didn't just put Dimitri in a room talking to camera. We put him in a sleek penthouse office overlooking a futuristic Chicago skyline. We added flying cars gliding past the windows mid-sentence. We art-directed and brought to life cutaway sequences that painted this version of the future in a fun, engaging way.

In traditional production, that's a multi-location shoot with green screen, extensive post-production, CGI artists, and a budget that would make most internal communications teams laugh and close the deck. Here, it was concepting, art direction, and iteration.

We used that freedom deliberately. The cutaways gave the video pacing and visual variety, taking it from a traditional talking head format to something that felt dynamic, engaging, immersive.

This is where AI production gets interesting. Not in replacing what cameras do well, but in enabling what cameras can't easily do. World-building. Impossible perspectives. Scenarios that would be cost-prohibitive to capture traditionally.

The constraint isn't budget anymore. It's imagination and the discipline to execute it well.

What Hasn't Changed

Story still means the same thing it always has.

People still know when something's real. They still reward clarity. They still tune out work that's flashy but hollow. The craft is just more visible now, because when everyone has access to the same generative AI tools, what separates good work from noise is taste, judgment, and the willingness to iterate until it's right.

We're at a strange moment where brands can make almost anything, but that abundance doesn't make the hard parts easier. It makes them more important:

You still have to know what you're trying to say. (A clear strategy.)

You still have to say it clearly. (A compelling creative idea.)

You still have to make people feel something. (Great storytelling.)

You still have to execute with craft. (Dialed-in production.)

AI didn't change any of that. It just changed what execution looks like.

For Curi Capital, that meant using the newest possible technology to reinforce the oldest possible truth: in a business built on trust, the human part isn't optional. It's the entire point.

We spent a month making Future Dimitri say exactly that.

And when present-day Dimitri stood in front of his team and revealed that the creativity behind what they'd just watched came from humans, the technology disappeared and the message remained.

That's when we knew it worked.

Greenhouse Studios is the content studio within Greenhouse Partners, an award-winning brand strategy and advertising firm based in Boulder, Colorado. We help brands tell stories that matter, with whatever tools make sense for the work.

FAQs

1) How do you create AI videos that look professional?

You build them like a production: concept → storyboard → starting frames → video generation in scenes → edit. The biggest difference from “AI demo videos” is discipline. You lock continuity (face, lighting, set) and cut the result like real footage, not like a novelty clip.

2) What’s the biggest mistake brands make when trying AI video?

Assuming it’s “prompt in, finished video out.” Most quality issues come from inconsistency. Faces change, backgrounds drift, odd gestures appear, and each generation becomes a new version of reality. Planning for continuity upfront beats trying to fix chaos later.

3) What tools are best for AI video generation?

There isn’t a single “best” stack. The landscape changes fast, and the right tools depend on your goals, such as realism vs stylization, whether you need a real person’s likeness, how much control you need, and how the final piece will be edited.

For the Curi “Future Dimitri” video described in this article, we used a toolchain approach:

Midjourney and Google Gemini image editing (“Nano Banana”) to create and refine starting frames

Adobe tools for finishing touches and continuity cleanup

Google Flow / Veo for frame-to-video generation in short shot-length chunks

ElevenLabs for voice cloning and performance consistency

Adobe Premiere for editing, pacing, sound, and final assembly

The key is matching tools to the creative concept and production needs, then building a workflow that keeps continuity and quality under control.

4) How do you keep AI video consistent across multiple scenes?

You combine starting frames, structured prompts, short generations, and editorial selection. Consistency isn’t something you “ask for” once. It’s something you produce with guardrails and iteration.

5) Is AI video production actually faster or cheaper than traditional production?

Often cheaper in hard costs (crew, locations, travel), but not instant. Time shifts into planning, iteration, and post-production judgment. AI can reduce certain costs, but it doesn’t remove the need for craft.

6) What kind of work does Greenhouse Studios do?

We’re a full-service content studio. We produce branded content, short- and long-form video series, documentary and unscripted storytelling, and social-first content. We also build AI-enabled workflows when the creative idea benefits from it. If you’ve got a story to tell, we’d love to talk.

7) Where is Greenhouse Studios located, and do you work with clients outside Colorado?

We’re based in Boulder, Colorado, and we work with brands, agencies, and partners across the United States and internationally. Our productions are often multi-location, and we create content built for modern, multi-channel distribution.

8) How can I contact Greenhouse Studios to discuss a project?

Use the form below to tell us about your project. We’d love to talk!